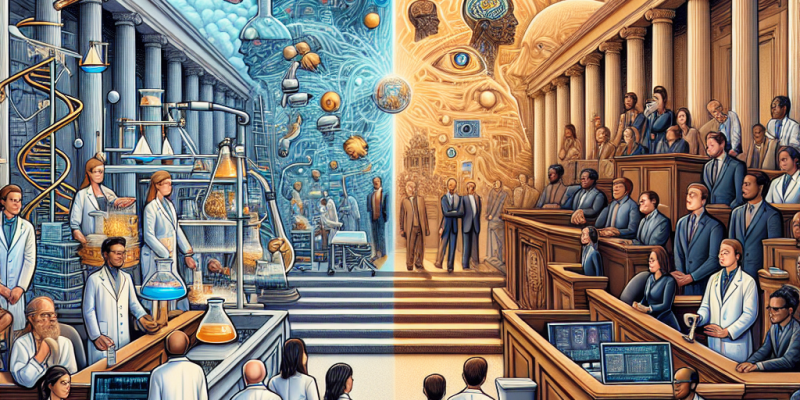

From Lab to Law: The Challenges of Regulating Emerging AI Technologies

As artificial intelligence (AI) continues to advance at a breakneck pace, the gap between technological development and regulatory frameworks has widened sharply. New AI applications are permeating our daily lives, from recommendation algorithms on streaming platforms to autonomous vehicles. However, the rapid deployment of these technologies raises critical questions about ethics, safety, and accountability. This article explores the challenges of regulating emerging AI technologies and the potential pathways forward.

The Technological Landscape

AI encompasses a broad range of technologies, including machine learning, natural language processing, and computer vision. These tools can transform industries, raise productivity, and enable innovations previously thought impossible. That same reach raises the stakes when they fail or are misused.

Speed of Innovation

One of the primary challenges in regulating AI is the speed at which these technologies evolve. Traditional regulatory processes, often slowed by bureaucracy and lengthy deliberation, are ill suited to keeping pace with rapid advancements. For instance, the release of generative AI models like ChatGPT prompted widespread debate about their implications, yet formal regulatory frameworks still lag well behind the technology’s deployment.

Lack of Standardization

The field of AI lacks widely adopted industry standards and consistent definitions. Different organizations and researchers use varying methodologies to develop AI systems, producing systems that differ in performance, accuracy, and how they handle ethical considerations. This lack of standardization complicates the creation of effective regulations, as lawmakers struggle to articulate criteria for evaluation and oversight.

Ethical Concerns

As AI technologies become more ubiquitous, ethical dilemmas arise. Issues such as bias in algorithms, privacy concerns, and the potential for surveillance and manipulation present a formidable challenge for regulators. High-profile cases, such as biased facial recognition software and data privacy violations, highlight the need for ethical oversight. However, defining ethical guidelines that can be universally applied poses significant challenges, as cultural, legal, and societal norms vary widely across borders.

The Global Regulatory Landscape

Regulation of AI is not simply a national concern but a global one, and countries are adopting different approaches to AI governance. The European Union, for example, has taken a proactive stance, establishing a comprehensive regulatory framework through the AI Act, adopted in 2024. This legislation classifies AI systems by risk level, providing a roadmap for compliance and accountability.

Conversely, many countries, including the United States, have taken a more fragmented approach, with state and federal agencies experimenting with regulation on a case-by-case basis. This lack of harmonization can create confusion for companies operating in multiple jurisdictions and hinder the agility required for innovation.

Balancing Innovation and Safety

Ultimately, the goal of any regulatory framework should be to foster innovation while ensuring safety and upholding ethical standards. Striking this balance is difficult. Overly stringent regulations could stifle innovation, driving talented researchers and companies to more permissive jurisdictions. Conversely, inadequate oversight could lead to harmful consequences, including public distrust of AI technologies.

Engaging stakeholders such as tech companies, academic researchers, lawmakers, and civil society in the regulatory process is crucial. Collaborative efforts can yield guidelines that address the concerns of all parties involved, fostering a regulatory environment that both supports innovation and protects the public interest.

Technological Solutions to Regulatory Challenges

Interestingly, the very technologies that are creating regulatory challenges may also offer solutions. AI-driven tools can aid in monitoring compliance, assessing risks, and identifying biases in AI systems. By leveraging these tools, regulators can gain insights that inform their policies and regulations.
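As one illustration of what a bias-identification tool might compute, the sketch below measures the gap in positive-decision rates between groups, a quantity often called the demographic parity difference. It is a minimal example under stated assumptions, not a method prescribed by any regulator: the function names, the toy data, and the group labels "a" and "b" are all hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Return the largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_gap(decisions, groups)
print(gap)  # 0.5 (group "a" is approved 75% of the time, group "b" 25%)
```

A gap near zero does not by itself show that a system is fair, and a large gap does not by itself show discrimination; a figure like this is a starting point for closer review, not a verdict.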

Emerging technologies, such as blockchain, could provide greater transparency in AI systems, allowing for more straightforward audits and accountability. Smart contracts could automate compliance checks, reducing the burden on businesses while ensuring adherence to regulatory requirements.
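The auditability idea can be sketched with a hash-chained log, in which each entry stores the hash of the previous one so that any later alteration is detectable. This is not a blockchain: it has no distributed consensus and no smart contracts, and it only illustrates the tamper-evidence property that makes such ledgers attractive for audits. The record fields and the model name `credit-scorer-v1` are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, record):
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a record, linking it to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "record": record,
        "prev": prev_hash,
        "hash": _entry_hash(prev_hash, record),
    })


def verify_log(log):
    """Return True only if every entry still matches its stored hash chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        expected = _entry_hash(prev_hash, entry["record"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical audit trail for a single AI system.
log = []
append_entry(log, {"model": "credit-scorer-v1", "event": "deployed"})
append_entry(log, {"model": "credit-scorer-v1", "event": "bias_audit_passed"})
print(verify_log(log))
```

Changing any earlier record after the fact makes `verify_log` return `False`, which is the property an auditor would rely on. A real deployment would also need to protect the latest hash itself, for example by publishing it or replicating it across independent parties.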

Conclusion

As AI technologies continue to advance and integrate into our lives, effective regulation becomes increasingly vital. Ensuring ethical AI development, protecting public safety, and fostering innovation all require a nuanced understanding of the technology and collaboration across multiple domains. By bridging the gap between the lab and the law, we can create a regulatory framework that not only addresses current challenges but also anticipates future developments, so that AI technologies develop in step with societal values.

As we navigate this uncharted territory, ongoing dialogue among stakeholders will be essential to balancing innovation with responsibility, so that the vast potential of AI is realized in a way that safeguards the public interest.